“Cannot run multiple SparkContexts at once” is an error raised when a program attempts to create a second SparkContext while one is already active in the same JVM. Spark allows only one active SparkContext per JVM, so the second attempt is rejected.

Understanding the root causes and implications of this error is crucial for effective Spark application development.

SparkContext serves as the entry point to Apache Spark’s functionality, providing an interface for creating RDDs, initializing Spark configurations, and managing cluster resources. Its lifecycle involves creation, initialization, and shutdown, and creating multiple SparkContexts concurrently can lead to resource conflicts and unpredictable behavior.

Error Explanation

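The error message “cannot run multiple SparkContexts at once” occurs when an attempt is made to create or initialize more than one SparkContext within the same JVM (Java Virtual Machine). This exact wording is the one PySpark reports; the Scala and Java APIs raise an equivalent exception stating that only one SparkContext may be running in the JVM. SparkContext is the entry point to Apache Spark, and it manages the Spark application’s execution environment and resources.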

The root cause of this error is that Spark permits only one active SparkContext per JVM, so SparkContext behaves in effect like a singleton. A new SparkContext can be created only after the existing one has been stopped. This design decision ensures that all Spark operations and computations are executed within a single, consistent execution context, preventing conflicts and data inconsistencies.
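The "one active instance per JVM" rule can be pictured with a small model in plain Scala. This is only a simplified sketch and not Spark's actual implementation; `ToyContext`, `create`, and `stop` are hypothetical names chosen for illustration.

```scala
import java.util.concurrent.atomic.AtomicReference

// Hypothetical stand-in for a context object; this is NOT a Spark class.
final class ToyContext(val name: String) {
  // Release the active slot so that a new context may be created.
  def stop(): Unit = ToyContext.clear(this)
}

object ToyContext {
  // Holds the single active context for this JVM, or null when none is active.
  private val active = new AtomicReference[ToyContext](null)

  // Create a context, failing if another one is still active.
  def create(name: String): ToyContext = {
    val ctx = new ToyContext(name)
    if (!active.compareAndSet(null, ctx)) {
      throw new IllegalStateException("Only one context may be active at a time")
    }
    ctx
  }

  // Clear the slot only if ctx is the context currently registered.
  private def clear(ctx: ToyContext): Unit = active.compareAndSet(ctx, null)
}

object ToyDemo {
  def main(args: Array[String]): Unit = {
    val first = ToyContext.create("first")
    // A second create() while "first" is active is rejected.
    val second = scala.util.Try(ToyContext.create("second"))
    println(s"second attempt failed: ${second.isFailure}")
    // After stop(), creating a new context is allowed again.
    first.stop()
    val third = ToyContext.create("third")
    third.stop()
  }
}
```

The point of the model is that the check happens at creation time: the second call fails immediately instead of letting two contexts compete for the same resources.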

Context and Background

SparkContext is the fundamental component of Apache Spark that initializes and manages the Spark application’s execution environment. It provides access to Spark’s core functionalities, such as data loading, transformations, actions, and cluster management.

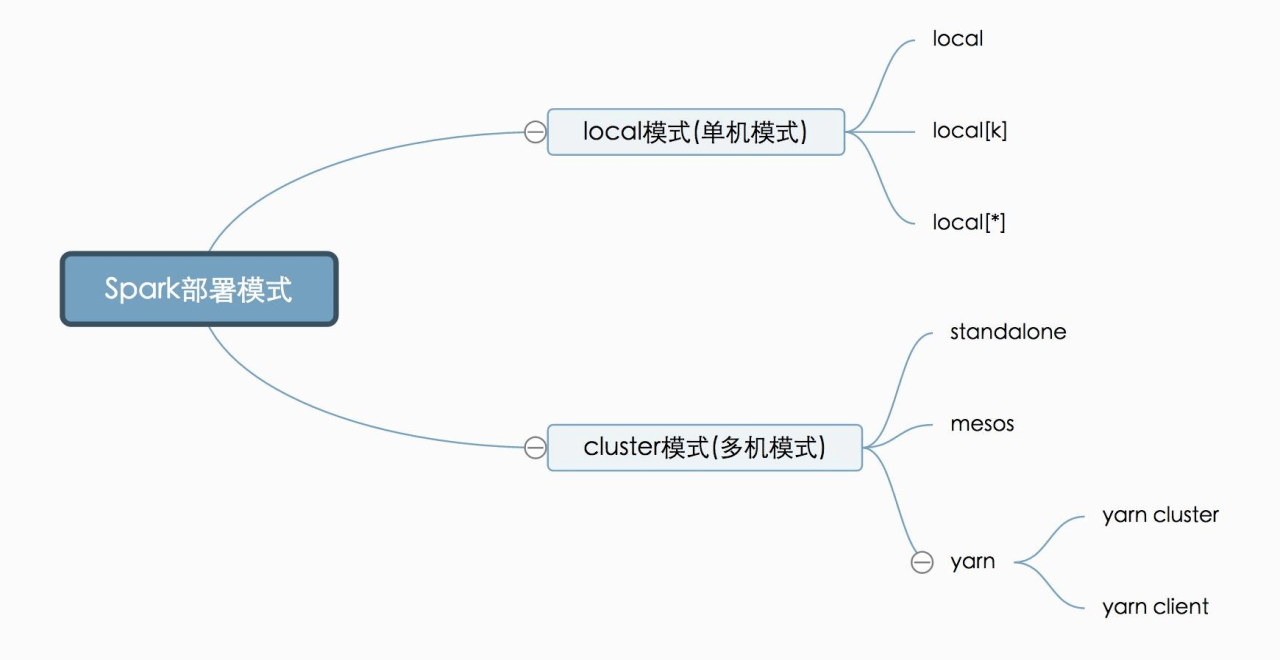

SparkContext encapsulates the application’s configuration, including the cluster mode (local, standalone, or YARN), resource allocation, and other runtime parameters. It also manages the communication and coordination between the driver program and the worker nodes in a distributed cluster.

SparkContext Lifecycle

The lifecycle of a SparkContext consists of the following stages:

- Creation: SparkContext is created using the SparkContext constructor, which takes a SparkConf object as an argument. The SparkConf object contains the application’s configuration parameters.

- Initialization: After creation, SparkContext initializes the execution environment by establishing connections to the cluster nodes, allocating resources, and setting up the necessary data structures.

- Execution: Once initialized, SparkContext coordinates the execution of Spark jobs, which consist of transformations and actions applied to RDDs (Resilient Distributed Datasets).

- Shutdown: When the application is complete, SparkContext is shut down, releasing resources and terminating connections to the cluster nodes.

It’s crucial to note that creating multiple SparkContexts concurrently can lead to conflicts and data inconsistencies due to the singleton nature of SparkContext.

Troubleshooting and Resolution

To resolve the error “cannot run multiple SparkContexts at once”, the following steps can be taken:

- Ensure Single SparkContext: Verify that only one SparkContext is created within the JVM. Multiple SparkContexts should be avoided to prevent conflicts.

- Proper Shutdown: Ensure that the existing SparkContext is properly shut down before creating a new one. Calling stop() on the existing SparkContext releases its resources and ensures a clean shutdown.

- Separate JVMs: If multiple Spark applications need to run concurrently, each application should be executed in a separate JVM to avoid the singleton conflict.
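The first two steps can be sketched as follows. This is a minimal example that assumes Spark is on the classpath and runs in local mode; the object name `ReuseOrRestart` and the application name are illustrative. `SparkContext.getOrCreate` returns the already active context when there is one, which avoids constructing a second context by accident.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object ReuseOrRestart {
  // Returns (whether both lookups gave the same context, element count from the new context).
  def demo(): (Boolean, Long) = {
    // Assumption: local mode, so the sketch runs without a cluster.
    val conf = new SparkConf().setAppName("ReuseOrRestart").setMaster("local[*]")

    // getOrCreate reuses the active SparkContext instead of building a second one.
    val sc1 = SparkContext.getOrCreate(conf)
    val sc2 = SparkContext.getOrCreate(conf)
    val sameInstance = sc1 eq sc2

    // Stop the existing context before constructing a fresh one.
    sc1.stop()
    val sc3 = new SparkContext(conf)
    try {
      (sameInstance, sc3.parallelize(Seq(1, 2, 3)).count())
    } finally {
      sc3.stop()
    }
  }

  def main(args: Array[String]): Unit = println(demo())
}
```

Wrapping the work in `try`/`finally` means the context is stopped even if a job throws, so a later attempt to create a context in the same JVM does not hit the error.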

Alternative Approaches

In certain scenarios, it may be necessary to run multiple Spark jobs concurrently without encountering the singleton conflict. Here are some alternative approaches:

- SparkSession: SparkSession is a newer API introduced in Spark 2.0 that provides a unified interface for creating and managing Spark applications. It encapsulates the functionality of SparkContext and other Spark components. Multiple SparkSessions can be created within the same JVM, but they all share a single underlying SparkContext.

- Separate JVMs: Running multiple Spark applications in separate JVMs is a reliable approach to avoid the singleton conflict. Each application can have its own SparkContext without any interference.

- YARN Client Mode: In YARN client mode, the driver program runs on the client machine. Several Spark applications can be submitted to the same YARN cluster concurrently; each runs its own driver process with its own SparkContext, while all of them share the cluster’s resources.

The choice of approach depends on the specific requirements and constraints of the application.
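For the SparkSession approach, the following sketch shows two sessions in one JVM that share a single SparkContext. It assumes Spark SQL is on the classpath and uses local mode; the object name, application name, and the view name `numbers` are illustrative.

```scala
import org.apache.spark.sql.SparkSession

object SharedContextSessions {
  // Returns (contexts are shared, view visible in first session, view visible in second session).
  def demo(): (Boolean, Boolean, Boolean) = {
    // Assumption: local mode, so the sketch runs without a cluster.
    val spark = SparkSession.builder()
      .appName("SharedContextSessions")
      .master("local[*]")
      .getOrCreate()
    try {
      // newSession() has its own SQL configuration and temporary views,
      // but reuses the SparkContext of the original session.
      val other = spark.newSession()
      val sharedContext = spark.sparkContext eq other.sparkContext

      // A temporary view registered in one session is scoped to that session.
      spark.range(5).createOrReplaceTempView("numbers")
      (sharedContext,
        spark.catalog.tableExists("numbers"),
        other.catalog.tableExists("numbers"))
    } finally {
      spark.stop()
    }
  }

  def main(args: Array[String]): Unit = println(demo())
}
```

Because the sessions share one SparkContext, calling `stop()` on either of them shuts down the context for both.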

Code Examples

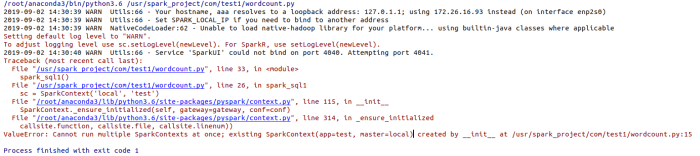

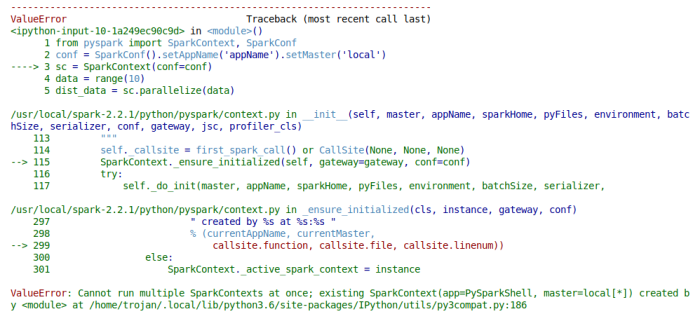

The following code snippet demonstrates the error “cannot run multiple SparkContexts at once”:

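    import org.apache.spark.{SparkConf, SparkContext}

    object MultipleSparkContexts {
      def main(args: Array[String]): Unit = {
        // Local mode is assumed here so the example runs without a cluster
        val conf = new SparkConf().setAppName("MultipleSparkContexts").setMaster("local[*]")
        // Create the first SparkContext
        val sc1 = new SparkContext(conf)
        // Attempt to create a second SparkContext while sc1 is still active.
        // This throws an exception: only one SparkContext may be active per JVM
        val sc2 = new SparkContext(conf)
      }
    }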

To resolve this error, ensure that only one SparkContext is created within the JVM. The following code snippet demonstrates a correct approach:

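    import org.apache.spark.{SparkConf, SparkContext}

    object SingleSparkContext {
      def main(args: Array[String]): Unit = {
        // Local mode is assumed here so the example runs without a cluster
        val conf = new SparkConf().setAppName("SingleSparkContext").setMaster("local[*]")
        // Create a SparkContext
        val sc = new SparkContext(conf)
        // Use the SparkContext to perform operations
        // ...
        // Shut down the SparkContext when finished
        sc.stop()
      }
    }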

Additional Considerations

Beyond the “cannot run multiple SparkContexts at once” error itself, other factors can cause SparkContext-related issues:

- Incorrect Configuration: Ensure that the SparkConf object used to create the SparkContext is properly configured with the desired settings.

- Resource Allocation: Verify that sufficient resources are allocated to the Spark application to avoid resource starvation.

- Cluster Health: Monitor the health of the Spark cluster to ensure that all nodes are functioning correctly and there are no network issues.
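For the configuration point, one option is to check the SparkConf before handing it to the SparkContext constructor. The sketch below is only an illustration that assumes Spark is on the classpath; `missingKeys` is a hypothetical helper, and the list of required keys is an assumption to adjust per application.

```scala
import org.apache.spark.SparkConf

object ConfCheck {
  // Hypothetical helper: returns the required keys that are not set in conf.
  def missingKeys(conf: SparkConf, required: Seq[String]): Seq[String] =
    required.filterNot(key => conf.contains(key))

  def main(args: Array[String]): Unit = {
    // Passing false skips loading spark.* values from system properties,
    // so only the settings made here are present.
    val conf = new SparkConf(false).setAppName("ConfCheck")

    // spark.master was never set, so it is reported as missing.
    println(missingKeys(conf, Seq("spark.app.name", "spark.master")))

    // toDebugString lists every key/value pair currently held by the conf.
    println(conf.toDebugString)
  }
}
```

A check like this fails fast with a clear message, instead of surfacing later as an error from the SparkContext constructor.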

Optimizing Spark applications to avoid the “cannot run multiple SparkContexts at once” error and other issues involves proper resource management, efficient code execution, and careful consideration of the application’s requirements.

Query Resolution

Why does the error “cannot run multiple SparkContexts at once” occur?

This error occurs when multiple SparkContexts are created within the same JVM. SparkContext is a singleton object, meaning only one instance can exist per JVM.

How can I resolve this error?

To resolve this error, ensure that only one SparkContext is created per JVM. If multiple Spark jobs need to run concurrently, consider using different JVMs or leveraging SparkSession.

What are the implications of creating multiple SparkContexts concurrently?

In practice, Spark rejects the second SparkContext with this error rather than letting both run. The restriction exists because concurrent SparkContexts in one JVM could cause resource conflicts and unpredictable behavior, so the recommended practice is to create only one SparkContext per JVM.